Arm vs x86: Instruction sets, architecture, and all key differences explained

The Android operating system was built to run on three different types of processor architecture: Arm, Intel x86, and MIPS. Arm is today’s ubiquitous architecture after Intel abandoned its smartphone CPUs, while MIPS processors haven’t been seen for years.

Arm has now become the CPU architecture used in all modern smartphone SoCs, and that’s true for both the Android and Apple ecosystems. Arm processors are also making their way into the PC market via Windows on Arm and Apple’s growing custom Apple Silicon range for Macs. So with the Arm vs Intel CPU war heating up big time, here’s everything you need to know about Arm vs x86.

CPU architecture explained

The Central Processing Unit (CPU) is the “brain” of your device, but it’s not exactly smart. A CPU only works when given very specific instructions, suitably called the instruction set, which tell the processor to move data between registers and memory or to perform a calculation using a specific execution unit (such as multiplication or subtraction). Unique CPU hardware blocks require different instructions, and these tend to scale up with more complex and powerful CPUs. Desired instructions can also inform hardware design, as we’ll see in a moment.

Applications that run on your phone aren’t written in CPU instructions; that would be madness with today’s large cross-platform apps that run on a variety of chips. Instead, apps written in various higher-level programming languages (like Java or C++) are compiled for specific instruction sets so that they run correctly on Arm, x86, or other CPUs. These instructions are further decoded into micro-ops within the CPU, which requires silicon space and power.
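Because compiled code targets one instruction set, software often needs to know which architecture it is running on. Here is a minimal Python sketch of that check. The `ARCH_FAMILIES` table and the `classify_arch` helper are illustrative assumptions rather than part of any platform API, and the list of machine strings is not exhaustive.

```python
import platform

# Hypothetical lookup table: common strings returned by platform.machine(),
# mapped to the architecture families discussed in this article.
ARCH_FAMILIES = {
    "x86_64": "x86-64",
    "amd64": "x86-64",
    "i386": "x86",
    "i686": "x86",
    "aarch64": "Arm (64-bit)",
    "arm64": "Arm (64-bit)",
    "armv7l": "Arm (32-bit)",
}


def classify_arch(machine: str) -> str:
    """Map a raw machine string to an architecture family, or 'unknown'."""
    return ARCH_FAMILIES.get(machine.lower(), "unknown")


if __name__ == "__main__":
    machine = platform.machine()
    print(machine, "->", classify_arch(machine))
```

An interpreted script like this runs unchanged on either architecture because the Python interpreter itself has already been compiled for the host CPU.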

Keeping the instruction set simple is paramount if you want the lowest-power CPU. However, higher performance can be obtained from more complex hardware and instructions that perform multiple operations at once, at the expense of power. This is a fundamental difference between Arm vs x86 and their historical approaches to CPU design.

x86 traditionally targets peak performance, Arm energy efficiency

Arm is RISC (Reduced Instruction Set Computing) based, while x86 is CISC (Complex Instruction Set Computing). Arm’s CPU instructions are reasonably atomic, with a very close correlation between the number of instructions and micro-ops. CISC, by comparison, offers many more instructions, many of which execute multiple operations (such as optimized math and data movement). This leads to better performance but more power consumption in decoding these complex instructions.
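To make the instruction versus micro-op relationship concrete, here is a toy Python model. The instruction names and their micro-op breakdowns are invented for illustration and are not real Arm or x86 encodings. The only point is that a RISC-style program maps roughly one-to-one onto micro-ops, while a single CISC-style instruction can expand into several.

```python
# Toy model only: these instructions and micro-op splits are illustrative,
# not actual Arm or x86 behavior.
MICRO_OPS = {
    # RISC-style: each instruction does one simple thing
    "LOAD r1, [a]": ["load"],
    "ADD r1, r1, r2": ["add"],
    "STORE r1, [a]": ["store"],
    # CISC-style: one instruction that reads memory, adds, and writes back
    "ADD [a], r2": ["load", "add", "store"],
}


def decode(program):
    """Expand a list of instructions into a flat list of micro-ops."""
    return [op for instr in program for op in MICRO_OPS[instr]]


risc_program = ["LOAD r1, [a]", "ADD r1, r1, r2", "STORE r1, [a]"]
cisc_program = ["ADD [a], r2"]

# Both programs do the same work, but the decoder's job differs:
# three simple instructions versus one complex instruction.
print(len(risc_program), decode(risc_program))
print(len(cisc_program), decode(cisc_program))
```

In this sketch the extra decoding work lives in the lookup for the single complex instruction, which mirrors the power cost of decoding described above.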

That said, the lines between RISC and CISC are a little blurrier these days, with each borrowing ideas from the other and a wide range of CPU cores built on architecture variations. Furthermore, the option to customize Arm’s architecture means that partners, such as Apple, can add their own more complex instructions.

But what’s important to note is that it’s the link between instructions and processor hardware design that makes a CPU architecture. This way, CPU architectures can be designed for different purposes, such as extreme number crunching, low energy consumption, or minimal silicon area. This is a key difference when looking at Arm vs x86 in terms of CPUs, as the former is based on a lower power instruction set and hardware.

Modern 64-bit CPU architectures

Today, 64-bit architectures are mainstream across smartphones and PCs, but this wasn’t always the case. Phones didn’t make the switch until 2013, around a decade after PCs. In a nutshell, 64-bit computing uses registers and memory addresses that are 64 bits (1s and 0s) wide. As well as compatible hardware and instructions, you also need a 64-bit operating system, such as Android.

Industry veterans may remember the hoopla when Apple introduced its first 64-bit processor ahead of its Android rivals. The move to 64-bit didn’t transform day-to-day computing. However, it is important for running math efficiently on high-accuracy floating-point numbers. 64-bit registers also improve 3D rendering accuracy and encryption speed, and simplify addressing more than 4GB of RAM.
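The 4GB figure falls straight out of the arithmetic: an address that is n bits wide can name 2^n distinct bytes. A short Python sketch of that calculation follows; the `addressable_bytes` helper is a name chosen here for illustration. It gives the theoretical limit only, and real processors typically wire up fewer physical address bits than the full 64.

```python
GIB = 2 ** 30  # bytes in one gibibyte


def addressable_bytes(address_bits: int) -> int:
    """Theoretical number of bytes reachable with a flat address of this width."""
    return 2 ** address_bits


print(addressable_bytes(32) // GIB)  # 4
print(addressable_bytes(64) // GIB)  # 17179869184
```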

Today, both architectures support 64-bit, but it's more recent in mobile

PCs moved to 64-bit well before smartphones, but it wasn’t Intel that created the modern x86-64 architecture (also known as x64). That accolade belongs to AMD, whose 1999 announcement extended Intel’s existing x86 architecture to 64 bits. Intel’s alternative IA64 Itanium architecture fell by the wayside.

Arm introduced its ARMv8 64-bit architecture in 2011. Rather than extend its 32-bit instruction set, Arm offers a clean 64-bit implementation. To accomplish this, the ARMv8 architecture uses two execution states, AArch32 and AArch64. As the names imply, one is for running 32-bit code and one for 64-bit. The beauty of the Arm design is that the processor can seamlessly swap from one state to the other during its normal execution. This means that the decoder for the 64-bit instructions is a new design that doesn’t need to maintain compatibility with the 32-bit era, yet the processor as a whole remains backward compatible. However, Arm’s latest ARMv9 Cortex-A processors are now 64-bit only, cutting off support for old 32-bit applications and operating systems on these next-gen CPUs. Google has also disabled support for 32-bit apps in the firmware of the Pixel 7.

Arm’s Heterogeneous Compute won over mobile

The architectural differences discussed above partly explain the current successes and issues faced by the two chip behemoths. Arm’s low power approach is perfectly suited to the sub-5W Thermal Design Power (TDP) requirements of mobile, yet performance scales up to match Intel’s laptop chips too; see Apple’s M1 series of Arm-based processors, which are providing serious competition in the PC space. Meanwhile, Intel’s 100W-plus TDP Core i7 and i9 products, along with rival chips from AMD’s Ryzen range, win big in servers and high-performance desktops, but have historically struggled to scale down below 5W, as the dubious Atom lineup shows.

Of course, we mustn’t forget the role that silicon manufacturing processes have played in vastly improving power efficiency over the past decade either. Broadly speaking, smaller CPU transistors consume less power. Intel’s 7nm CPUs (dubbed Intel 4 process technology) aren’t expected until 2023, and those may be built by TSMC rather than Intel’s foundries. In that time, smartphone chipsets have moved from 20nm to 14nm, 10nm, 7nm, 5nm, and now 4nm designs on the market as of 2022. This has been achieved simply by leveraging competition between Samsung and TSMC foundries. The same competition has also partly helped AMD close the gap on its x86-64 rival with its latest 7nm and 6nm Ryzen processors.

However, one unique feature of Arm’s architecture has been particularly instrumental in keeping TDP low for mobile applications: heterogeneous compute. The idea is simple enough: build an architecture that allows different CPU parts (in terms of performance and power) to work together for improved efficiency.

Arm's ability to share workloads across high- and low-performance CPU cores is a boon for energy efficiency

Arm’s first stab at this idea was big.LITTLE back in 2011 with the big Cortex-A15 and little Cortex-A7 core. The idea of using bigger out-of-order CPU cores for demanding applications and power-efficient in-order CPU designs for background tasks is something smartphone users take for granted today, but it took a few attempts to iron out the formula. Arm built on this idea with DynamIQ and the ARMv8.2 architecture in 2017, allowing different CPUs to sit in the same cluster, sharing memory resources for far more efficient processing. DynamIQ also enables the 2+6 CPU design that’s common in mid-range chips, as well as the little, big, bigger (1+3+4 and 2+2+4) CPU setups seen in flagship-tier SoCs.
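The core idea behind big.LITTLE can be sketched in a few lines of Python. This is a deliberately simplified toy, not how any real operating system scheduler works: the `Task` class, the `demand` estimate, and the 0.5 threshold are all assumptions made up for illustration. Real schedulers weigh many more signals, such as thermals and how a task's load changes over time.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    demand: float  # hypothetical load estimate between 0.0 and 1.0


def assign_core(task: Task, threshold: float = 0.5) -> str:
    """Send demanding tasks to a 'big' core and light ones to a 'little' core."""
    return "big" if task.demand >= threshold else "little"


# Example workload with made-up demand figures.
tasks = [
    Task("game_render", 0.9),
    Task("email_sync", 0.1),
    Task("music_playback", 0.2),
]

placement = {task.name: assign_core(task) for task in tasks}
print(placement)
```

Here only the game lands on a big core, while background work stays on the little cores where it costs less power.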

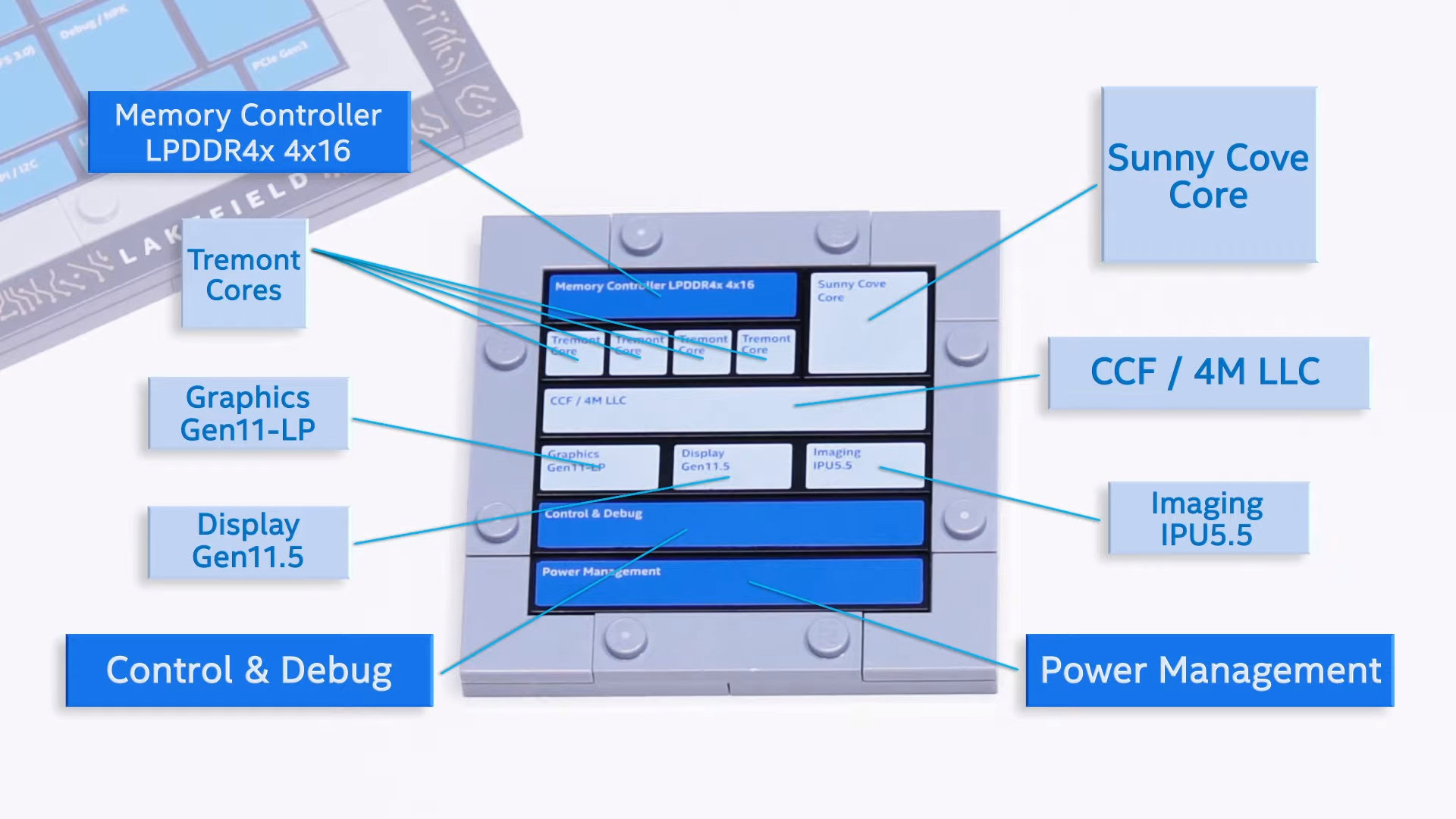

Intel’s rival Atom chips, sans heterogeneous compute, couldn’t match Arm’s balance of performance and efficiency. It took until 2020 for Intel’s Foveros, Embedded Multi-die Interconnect Bridge (EMIB), and Hybrid Technology projects to yield a competing chip design — the 10nm Lakefield. Lakefield combines a single, high-performance Sunny Cove core with four power-efficient Tremont cores, along with graphics and connectivity features. However, even this package is targeted at connected laptops with a 7W TDP, which is still too high for smartphones.

Today, Arm vs x86 is increasingly fought in the sub-10W TDP laptop market segment, where Intel scales down and Arm scales up increasingly successfully. Apple’s switch to its own custom Arm chips for Mac is a prime example of the growing performance reach of the Arm architecture, thanks in part to heterogeneous computing along with custom optimizations made by Apple.

Custom Arm cores and instruction sets

Another important distinction between Arm and Intel is that the latter controls its whole process from start to finish and sells its chips directly. Arm simply sells licenses. Intel keeps its architecture, CPU design, and even manufacturing entirely in-house, although that last point may change as Intel looks to diversify some of its cutting-edge manufacturing. Arm, by comparison, offers a variety of products to partners like Apple, Samsung, and Qualcomm. These range from off-the-shelf CPU core designs like the Cortex-X4 and A720, to designs built in partnership through its Cortex-X Custom (CXC) program, to custom architecture licenses that allow companies like Apple and Samsung to build custom CPU cores and even make adjustments to the instruction set.

Apple builds custom CPUs to extract as much performance-per-watt as possible.

Building custom CPUs is an expensive and involved process but can lead to powerful results when done correctly. Apple’s CPUs showcase how bespoke hardware and instructions can push Arm’s performance to rival mainstream x86-64 and beyond, although Samsung’s Mongoose cores were less successful and were eventually wound down. Qualcomm is also re-entering the custom Arm CPU game, having acquired Nuvia for $1.4 billion.

Apple intends to gradually replace Intel CPUs inside its Mac products with its own Arm-based silicon. The Apple M1 was the first chip in this effort, powering the latest MacBook Air, Pro, and the Mac Mini. The latest M1 Max and M1 Ultra boast some impressive performance improvements, highlighting that high-performance Arm cores can take on x86-64 in more demanding compute scenarios.

At the time of writing, the world's most powerful supercomputer, Fugaku, runs on Arm

The x86-64 architecture used by Intel and AMD remains out in front in terms of raw performance in the consumer hardware space. But Arm is now very competitive in product segments where high performance and energy efficiency remain key, which includes the server market. At the time of writing, the world’s most powerful supercomputer is running on Arm CPU cores for the first time ever. Its A64FX SoC is Fujitsu-designed and the first to run the Armv8-A SVE architecture.

Software compatibility

As we mentioned earlier, applications and software have to be compiled for the CPU architecture they run on. The historical marriage between CPUs and ecosystems (such as Android on Arm and Windows on x86) meant compatibility was never really a concern, as apps didn’t need to run across multiple platforms and architectures. However, growth in cross-platform apps and operating systems running on multiple CPU architectures is changing this landscape.

Apple’s Arm-based Macs, Google’s Chrome OS, and Microsoft’s Windows on Arm are all modern examples where software needs to run on both Arm and x86-64 architectures. Compiling native software for both is an option for new apps and developers willing to invest in recompilation. To fill in the gaps, these platforms also rely on code emulation: translating code compiled for one CPU architecture to run on another. This is less efficient and degrades performance compared to native apps, but current emulation is good enough to ensure that apps work.

After years of development, Windows on Arm emulation is in a pretty good state for most applications. Similarly, Android apps run decently on Windows 11 and Intel Chromebooks for the most part. Apple has its own translation tool, dubbed Rosetta 2, to support legacy Mac applications. But all three suffer performance penalties compared to natively compiled apps.

Arm vs x86: The final word

Over the past decade of the Arm vs x86 rivalry, Arm has won out as the choice for low-power devices like smartphones. The architecture is also making strides into laptops and other devices where enhanced power efficiency is in demand. Despite losing out on phones, Intel’s low power efforts have improved over the years too, with hybrid ideas like Alder Lake and Raptor Lake now sharing much more in common with traditional Arm processors found in phones.

That said, Arm and x86 remain distinctly different from an engineering standpoint, and they continue to have individual strengths and weaknesses. However, consumer use cases across the two are becoming blurred as ecosystems increasingly support both architectures. Yet, while there’s crossover in the Arm vs x86 comparison, it’s Arm that is certain to remain the architecture of choice for the smartphone industry for the foreseeable future. The architecture is showing major promise for laptop-class compute and efficiency too.