Arm Cortex-X4, A720, and A520: 2024 smartphone CPUs deep dive

Arm unveiled several new technologies during Tech Day 2023, including its ray tracing-capable 5th Gen graphics architecture and a trio of new CPU cores – the Cortex-X4, Cortex-A720, and Cortex-A520.

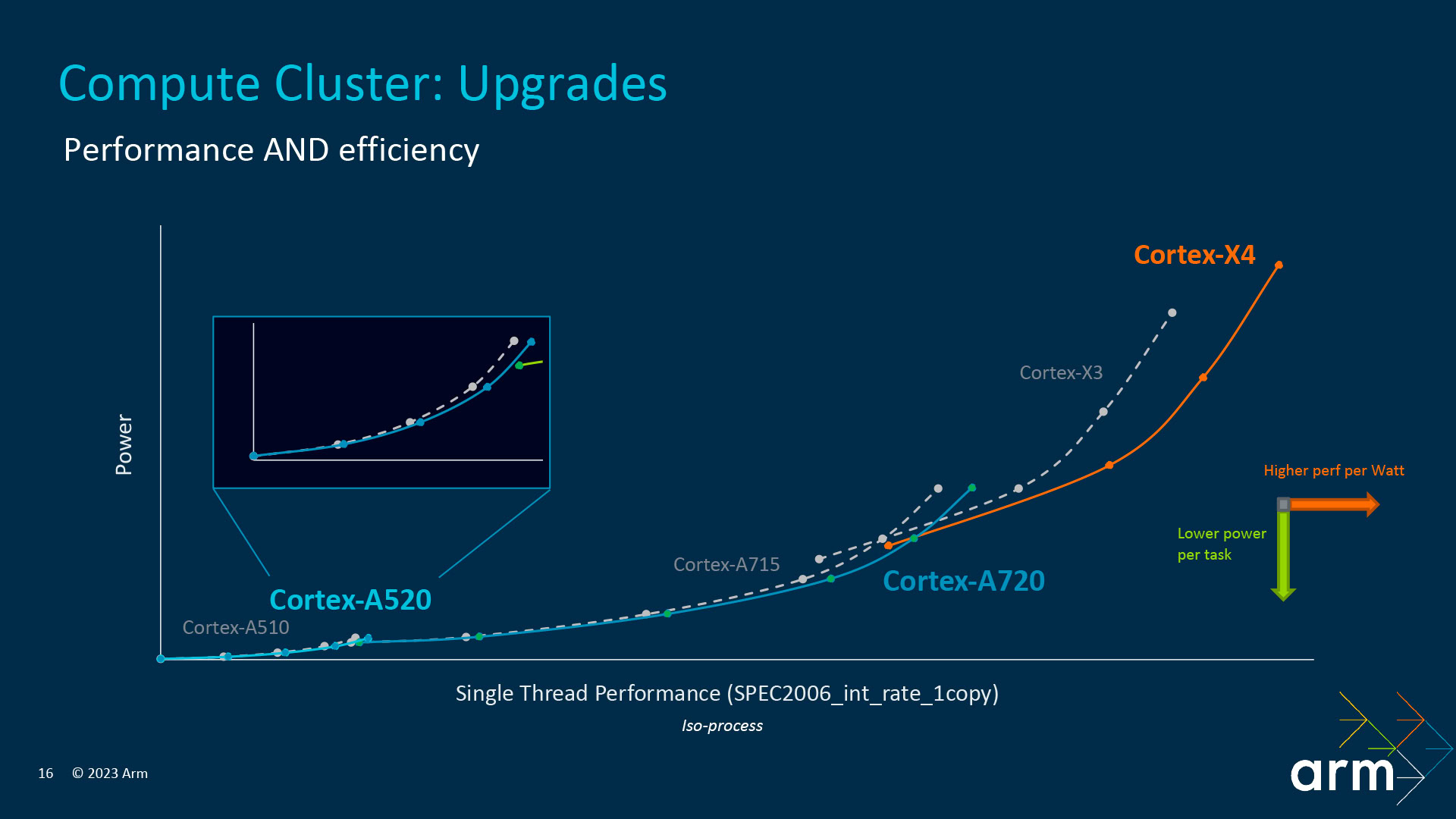

The new cores pick up from 2022’s Cortex-X3 and Cortex-A715 CPUs and 2021’s energy-efficient Cortex-A510. A three-core roadmap remains unique in the CPU space, with Arm targeting high-end, sustainable, and low-power performance points and bundling them together into a single cluster to

To understand what’s new and how this all fits together, we’re diving deep into the inner workings of Arm’s 2023 CPU announcement.

Headline performance improvements

If you’re after a summary of what to expect next year, here are the key numbers (according to Arm).

The Cortex-X4, the fourth-generation high-performance X-series CPU, offers up to 14% more single-thread performance than last year’s Cortex-X3 found in the Snapdragon 8 Gen 2. In Arm’s example, the Cortex-X4 is clocked at 3.4GHz versus 3.25GHz for the X3, all other factors being equal. More importantly, the new core is up to 40% more power efficient when targeting the same peak performance point as the Cortex-X3, which is a notable win for sustained performance workloads. This all comes in at just under 10% area growth (for the same cache size), with more wins to come from the move to smaller manufacturing nodes.
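As a rough back-of-the-envelope check, the headline uplift can be split into a clock-speed part and a per-clock part. The sketch below assumes performance scales linearly with frequency, which is a simplification rather than Arm's methodology, and the function name is purely illustrative.

```python
def implied_per_clock_gain(perf_gain: float, new_clock_ghz: float, old_clock_ghz: float) -> float:
    """Fractional per-clock gain, ASSUMING performance = per-clock work x frequency."""
    clock_ratio = new_clock_ghz / old_clock_ghz
    return (1.0 + perf_gain) / clock_ratio - 1.0


# Figures quoted above: up to 14% faster, 3.4GHz versus 3.25GHz.
clock_uplift = 3.4 / 3.25 - 1.0
per_clock_gain = implied_per_clock_gain(0.14, 3.4, 3.25)

print(f"Clock uplift: {clock_uplift:.1%}")
print(f"Implied per-clock gain: {per_clock_gain:.1%}")
```

Under that assumption, a little under 5% of the uplift comes from the higher clock and roughly 9% from doing more work per cycle.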

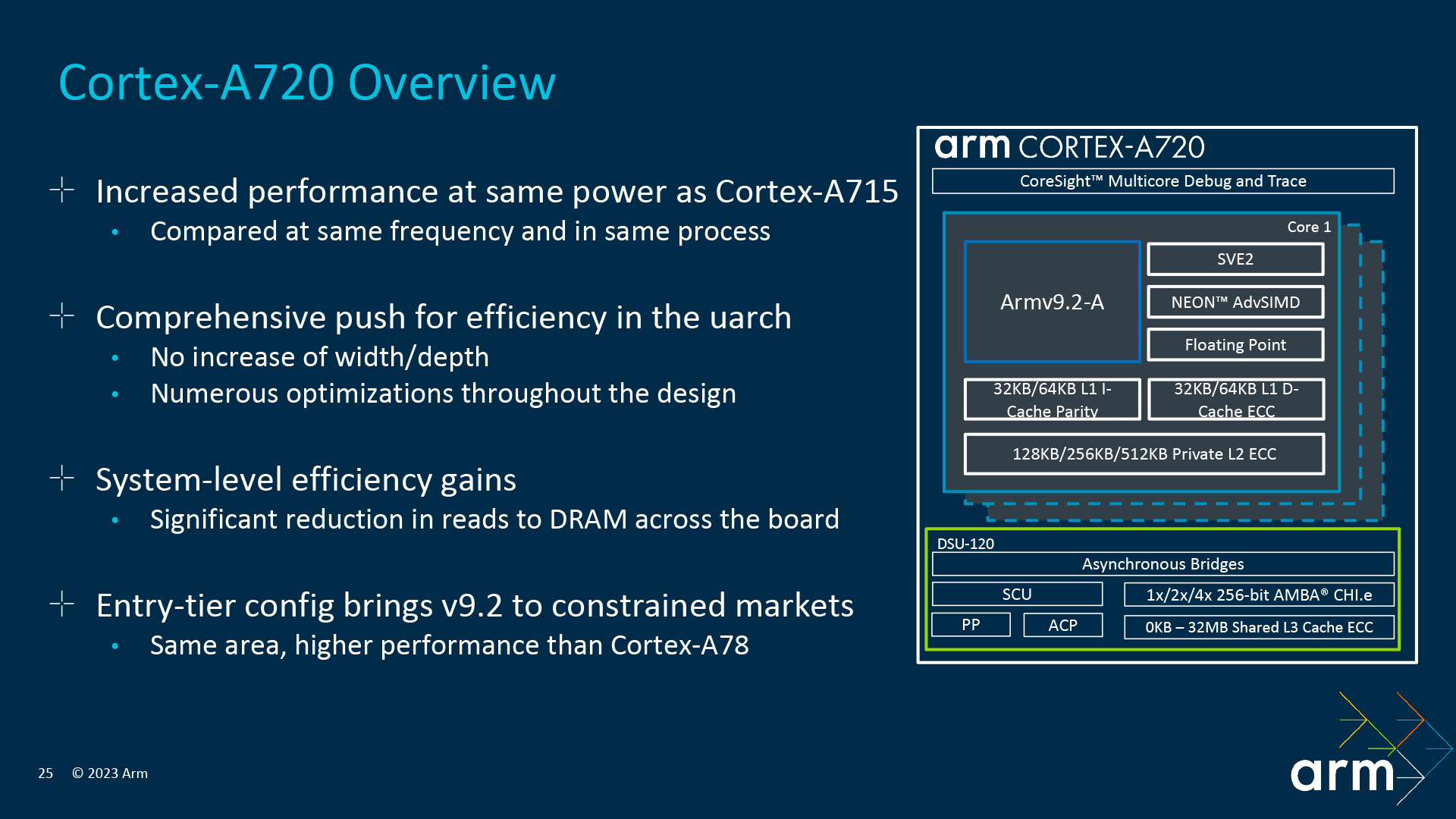

More power efficiency gains are to be found with the middle Cortex-A720 core. It’s 20% more power efficient than last year’s Cortex-A715 when targeting the same performance point on a like-for-like manufacturing basis. Alternatively, the chip can provide 4% more performance for the same power consumption as last year’s core.

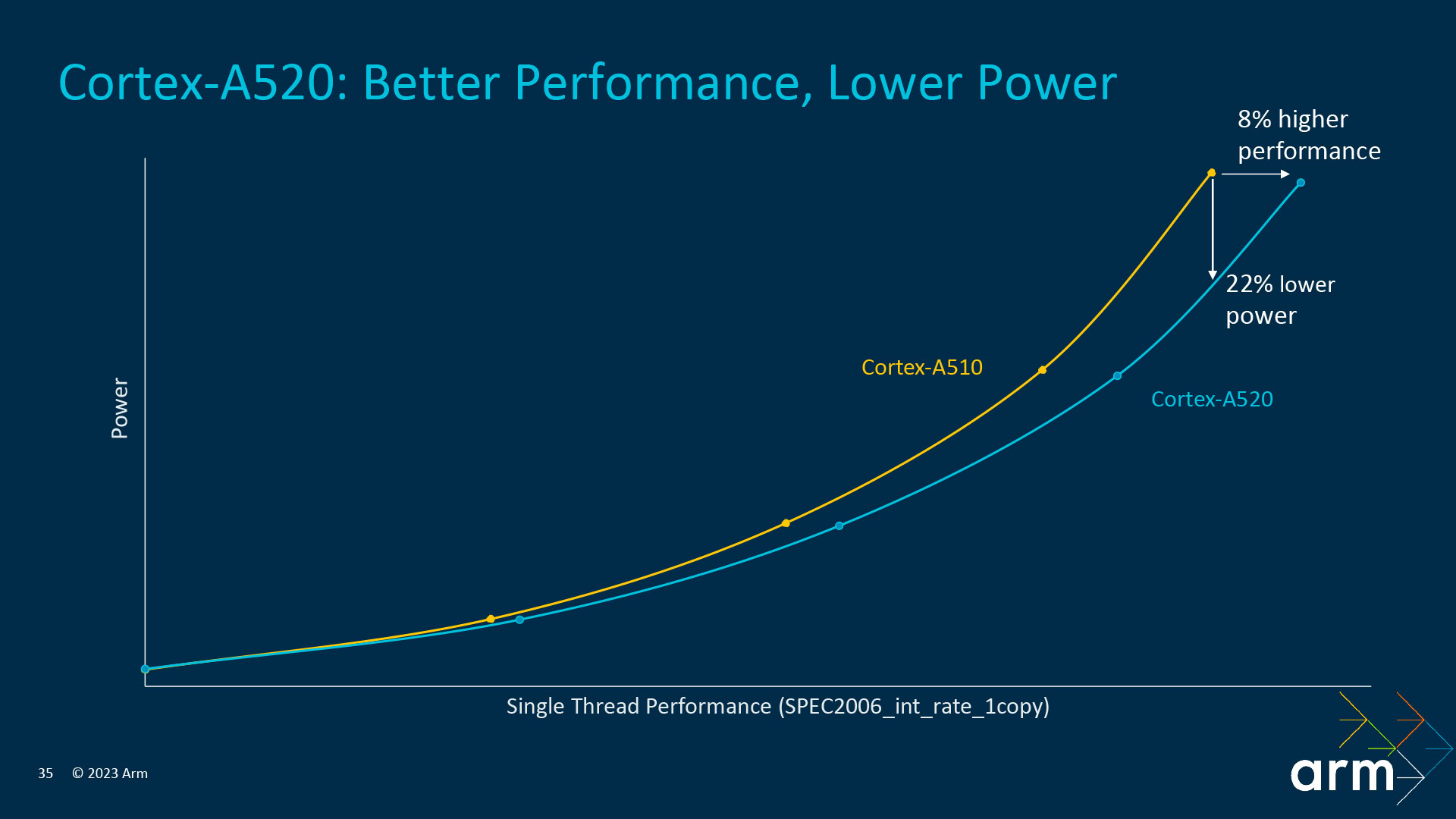

Rounding out Arm’s latest triple CPU portfolio is the Cortex-A520, again boasting double-digit efficiency gains. The core is up to 22% more efficient than 2022’s A510 for the same performance point. Furthermore, according to Arm’s benchmarks, the core can provide up to 8% more performance for the same power consumption. That’s without including gains from the improved manufacturing nodes we expect to see by the end of 2023.
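To put the efficiency claims in terms of power draw, here is a minimal sketch that assumes "efficiency" means performance per watt, so power at equal performance scales as 1 / (1 + gain). Arm may define its figures differently (for example, as a straight power reduction), so treat the results as illustrative only.

```python
def power_at_same_performance(efficiency_gain: float) -> float:
    """Relative power draw (previous core = 1.0) at equal performance.

    ASSUMPTION: efficiency = performance / power, so power scales as 1 / (1 + gain).
    """
    return 1.0 / (1.0 + efficiency_gain)


# "Up to" efficiency gains quoted in this section.
claims = {"Cortex-X4": 0.40, "Cortex-A720": 0.20, "Cortex-A520": 0.22}
relative_power = {core: power_at_same_performance(gain) for core, gain in claims.items()}

for core, power in relative_power.items():
    print(f"{core}: {power:.1%} of the previous core's power ({1 - power:.1%} saving)")
```

Read this way, a 40% efficiency gain works out to a little under 29% less power for the same work, not 40% less.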

Efficiency is the aim of the game this year, then, but that doesn’t mean any of these new cores are lacking in performance either. Let’s get into the fine details to see how Arm has done it.

Arm Cortex-X4 deep dive

If you’ve followed along with our analysis in years gone by, you’ll have spotted the general trend already. Once more, Arm has gone wider and deeper with the Cortex-X4, allowing the core to do even more per clock cycle at the expense of a slightly bigger silicon footprint (around 10% for the same cache size as last year). Combined with a new 2MB L2 cache option for high-performance workloads, this core is built to fly.

To start with, the out-of-order execution core is bigger this time around. There are now eight ALUs (up from six), an extra branch unit to bring the total to three, and an additional integer MAC unit for good measure. Pipelined floating point divider/sqrt instructions further improve the core’s number-crunching capabilities.

It’s worth pointing out that the two additional ALUs are the single-instruction type for more basic mathematical operations. Likewise, the MAC unit replaces an old mixed-instruction MUL ALU, bringing with it additional capabilities but not adding in a completely new unit. There also don’t appear to have been any changes to the floating point NEON/SVE2 units. So while the core is certainly bigger, leveraging those capabilities does depend on the use case.

| | Arm Cortex-X4 | Arm Cortex-X3 | Arm Cortex-X2 |
|---|---|---|---|
| Peak clock speed | ~3.4GHz | ~3.25GHz | ~3.0GHz |
| Decode Width | 10 instructions | 6 instructions (8 mop) | 5 instructions |
| Dispatch Pipeline Depth | 10 cycles | 11 cycles for instructions (9 cycles for mop) | 10 cycles |
| OoO Execution Window | 768 (2x 384) | 640 (2x 320) | 576 (2x 288) |
| Execution Units | 6x ALU, 1x ALU/MAC, 1x ALU/MAC/DIV, 3x Branch | 4x ALU, 1x ALU/MUL, 1x ALU/MAC/DIV, 2x Branch | 2x ALU, 1x ALU/MAC, 1x ALU/MAC/DIV, 2x Branch |
| L1 cache | 64KB (assumed) | 64KB | 64KB |
| L2 cache | 512KB / 1MB / 2MB | 512KB / 1MB | 512KB / 1MB |
| Architecture | ARMv9.2 | ARMv9 | ARMv9 |

Key changes are also found at the core’s front end to keep the core fed with things to do. The instruction dispatch width is now 10-wide, a notable upgrade from last year’s 6-instruction/8-mop width. Eagle-eyed readers will have noticed the dedicated mop cache is gone, but more on that in a minute. The instruction pipeline length is now ten deep, a slight change to the 11-instruction/9-mop latency from last year, but it’s pretty much in the same area for stall latency.

The execution window sits at a hefty 768 instructions (384 entries times two fused microOPs) in flight at one time, up from 640. That’s a lot of instructions available for out-of-order optimization, so optimal fetching is essential. Arm says it redesigned the single-instruction cache, leveraging the capabilities from the old separate mop-cache approach with additional fused instructions. Paired with accompanying branch predictors, Arm says the front end has been optimized for applications with large instruction footprints, significantly reducing pipeline stalls for real-world workloads (less so for benchmarks).
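The in-flight figures follow from simple arithmetic: the number of window entries multiplied by two fused micro-ops per entry. A quick sketch using the X3 and X4 entry counts quoted in this article (the function name is illustrative):

```python
def in_flight_instructions(window_entries: int, fused_per_entry: int = 2) -> int:
    """In-flight instruction count as quoted: entries x fused micro-ops per entry."""
    return window_entries * fused_per_entry


x3_window = in_flight_instructions(320)  # Cortex-X3: 2x 320
x4_window = in_flight_instructions(384)  # Cortex-X4: 2x 384
growth = x4_window / x3_window - 1.0

print(f"X3: {x3_window}, X4: {x4_window}, growth: {growth:.0%}")
```

That is a 20% larger window generation on generation, which is why keeping the front end fed matters so much.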

A bigger, wider Cortex-X4 means more performance for demanding workloads, but it's more efficient too.

Interestingly, Arm’s mop cache approach has been diminishing for a few years. The cache shrank from 3,000 to 1,500 entries in the X3. Arm removed the mop cache entirely from the A715 when introducing smaller 64-bit only decoders, moving the instruction fusion mechanism into the instruction cache to enhance throughput. It appears Arm has taken the same approach here with the wider X4 core.

The Cortex-X4 has an improved back end too. Arm split one of the load/store units into dedicated load and store, allowing for up to four operations per cycle. There’s also a new L1 temporal data prefetcher and the option to double the L1 data TLB cache this generation. Combined with the larger L2 option (which doesn’t suffer any additional latency), Arm can keep more instructions close to the core for additional performance while also reading from distant memory less often. This all adds up to those healthy energy savings.

Arm Cortex-A720 deep dive

Sustained performance is hugely important for mobile use cases, so the energy efficiency of Arm’s middle cores has become increasingly important. The Cortex-A720 doesn’t mess with the existing formula too much (there’s no increase in width or depth here), preferring to optimize last year’s A715 core to eke out longer battery life.

There are a few changes to the inner core, though. In the out-of-order core, there’s now a pipelined FDIV/FSQRT unit (borrowed from the X4) to speed up those operations without an area impact. Similarly, faster transfers from NEON/SVE2 to integer units and earlier deallocation from Load/Store queues effectively boost their size without a physical area increase.

At the front end, there’s a lower 11-cycle branch mispredict penalty compared to 12 in the A715, and an improved design of 2-taken branch prediction that lowers power without impacting performance. The general reasoning is that less time spent on stalls is less power wasted.

Longer gaming sessions rely on power-efficient middle-cores like the A720.

Memory is also a big factor in power consumption, so Arm has spent time optimizing the A720 here too. You’ll find a new L2 spatial-prefetch engine (again distilled from the Cortex-X design), 9-cycle latency to access L2 (down from 10 cycles), and up to 2x the memset(0) bandwidth in L2 (memset(0) being a common operating system operation), which all further add up to improved power efficiency.

Arm always offers an element of configuration with its core designs, which usually involves various cache trade-offs. The company has gone further with the A720, offering a smaller area-optimized footprint option that fits into the same size as 2020’s Cortex-A78 while providing additional performance and ARMv9 security benefits. To accomplish this, Arm shrinks certain elements of the A720 design without stripping features out (think a smaller branch predictor, as a hypothetical example). This does incur a power efficiency penalty and isn’t particularly recommended for high-performance applications like smartphones. Instead, Arm expects to see this implemented in markets where silicon area is at an especially high premium.

Still, it’s an interesting idea and hints that we may see Arm’s silicon partners opt for additional variation within core clusters to further balance performance and energy efficiency needs. If you thought comparing SoCs was difficult already, just wait.

Arm Cortex-A520 deep dive

Much like the A720, Arm’s latest small core has been revamped to eke out those all-important performance-per-watt efficiency gains. Arm claims up to 22% better power efficiency than the A510. To this end, the Cortex-A520 actually slims down its execution capabilities this year, yet manages to claw back performance to still hand in 8% better average performance for the same power consumption.

Arm removed a third ALU pipeline from the Cortex-A520, but the core still has three ALUs in total. In other words, the A520 can only issue two ALU instructions per cycle, meaning that one of the three ALUs may sit idle in a given cycle. This clearly has a performance penalty but saves on issue logic and result-storing power. Given Arm found performance improvements elsewhere, the trade-off balances out overall.
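To get a feel for the worst-case cost of the narrower issue, here is a deliberately simplified toy model. It assumes a stream of fully independent ALU operations with no stalls or other instruction types, which real code on an in-order core rarely provides, so it is an upper bound on the penalty rather than a prediction. The names are hypothetical.

```python
import math


def cycles_to_issue(num_alu_ops: int, alu_issue_width: int) -> int:
    """Cycles to issue a run of independent ALU ops at a fixed issue width.

    Toy model: ignores dependencies, stalls, and non-ALU instructions.
    """
    return math.ceil(num_alu_ops / alu_issue_width)


ops = 12
three_wide = cycles_to_issue(ops, 3)  # hypothetical core issuing three ALU ops per cycle
two_wide = cycles_to_issue(ops, 2)    # two ALU ops per cycle, as described for the A520

print(f"{ops} ALU ops: {three_wide} cycles at 3-wide, {two_wide} cycles at 2-wide")
```

In practice, data dependencies often keep an in-order core from filling three ALU slots anyway, which helps explain why the real-world penalty can be small enough to recover elsewhere.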

| | Arm Cortex-A520 | Arm Cortex-A510 | Arm Cortex-A55 |
|---|---|---|---|
| Peak clock speed | ~2.0GHz | ~2.0GHz | ~2.1GHz |
| Decode Width | 3 instructions (2 ALU) | 3 instructions | 2 instructions |
| Execution Units | 3x ALU, 1x ALU/MAC/DIV, 1x Branch | 3x ALU, 1x ALU/MAC/DIV, 1x Branch | 3x ALU, 1x ALU/MAC/DIV, 1x Branch |
| L1 cache | 32KB / 64KB (assumed) | 32KB / 64KB | 16KB - 64KB |
| L2 cache | 0KB - 512KB | 0KB - 512KB | 64KB - 256KB |
| Architecture | ARMv9.2 | ARMv9 | ARMv8.2 |
| Merged-core option? | Yes (shared NEON/SVE2, shared L2 cache, private crypto optional) | Yes (shared NEON/SVE2, shared L2 cache, private crypto optional) | No |

So where do these performance improvements come from? For one, the A520 implements a new QARMA3 Pointer Authentication (PAC) algorithm, which is particularly beneficial to in-order cores. It reduces the overhead hit from PAC security to <1%. Arm has also miniaturized aspects from its A7 and X series data prefetchers and branch predictors to a small core footprint, which helps with throughput.

Another important Cortex-A520 fact to note is that it’s a 64-bit-only design. There is no 32-bit option, unlike last year’s A510 revision, and Arm noted that its Cortex-A roadmap is 64-bit-only from here on out. The option to merge two A520 cores into a pair with shared NEON/SVE2, L2 cache, and optional crypto capabilities to save on silicon area remains. Arm notes that merged and individual A520 cores can live in the same cluster.

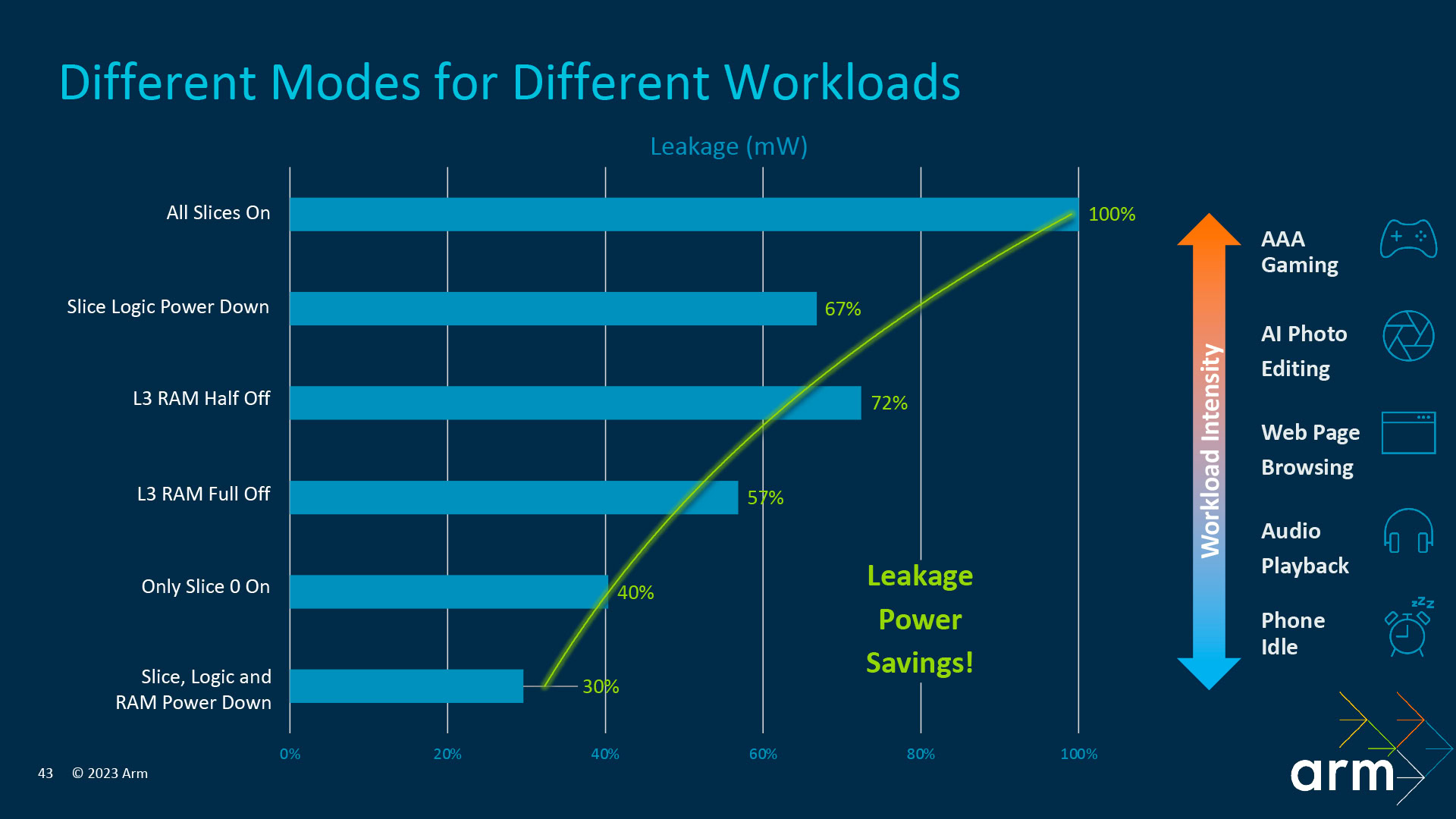

DynamIQ improvements to boot

Tying these cores together is a revamped DynamIQ Shared Unit (DSU), the DSU-120. Headline features include support for up to 14 cores per cluster, up from 12 in the DSU-110. The shared L3 cache comes with new 24MB and 32MB configuration options, so up to double last year’s cache size. That’s a boon for PC-class use cases that push Arm’s performance envelope.

In typical Arm fashion, the DSU-120 has also been optimized for power consumption. Leakage power (energy consumption lost during idle) is a big focus. The DSU-120 implements six different cache power modes, including L3 half-on, low power L3 data retention, slice logic power toggling, and individual slice power-downs. When CPU cores are put into a low-power state, the new DSU can also power off memory more flexibly. In terms of numbers, Arm boasts a 7% reduction in L3 dynamic power consumption and 18% less power consumption from cache misses.

Other changes include three ports for connecting to DRAM controllers, a second ACP port to double the bandwidth of high-performance accelerators connected to the cache, and a new cache capacity partitioning system that can reserve and limit the amount allocated to a specific task.

The key takeaway from Arm’s three CPU cores is, first and foremost, greatly improved power efficiency across the entire portfolio. And that’s before taking into account the benefits of next-gen manufacturing nodes. This is clearly good news for smartphone chipsets, where additional battery life is increasingly more important than additional performance. Sustained workloads, such as long gaming sessions, will definitely benefit from the more frugal Cortex-A720.

Arm’s latest CPU cores also cater to the growing interest in Arm-based PCs. This generation’s big performance gains are reserved for the hulking Cortex-X4 CPU, which, combined with higher core counts, is increasingly capable of demanding desktop-class workloads. We’ll have to see if the ecosystem partners decide to build new PC-grade Arm silicon this year.